If your needs to download a file are more simplistic, you can probably use the other methods mentioned on this thread, or the linked thread. The details of DownloadFileRequiringHeadersAndCookies are here. Var cookieContainer = new CookieContainer() ĬookieContainer.Add(new Cookie(cookie.Name, cookie.Value, cookie.Path, cookie.Domain))

Populate the Cookie Container like this: private CookieContainer BuildCookieContainer(IEnumerable cookies) NEED THIS TIMEOUT TO KEEP THE BROWSER OPEN WHILE THE FILE IS DOWNLOADING!Īwait page.WaitForTimeoutAsync(1000 * configs.DownloadDurationEstimateInSeconds) Var cookieContainer = BuildCookieContainer(pageCookies) Īwait DownloadFileRequiringHeadersAndCookies(getUrl, fullPath, cookieContainer, cancellationToken) Īwait page.ClickAsync("button") Var pageCookies = await page.GetCookiesAsync()

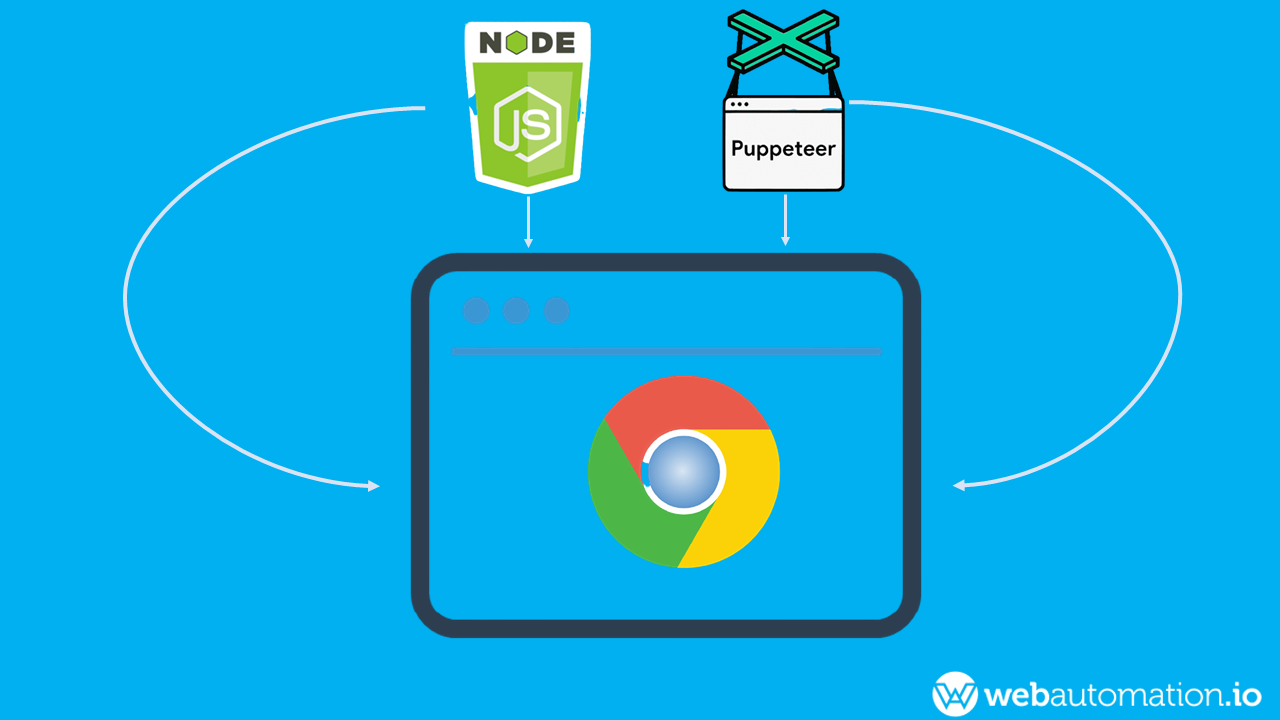

Add the cookies to a container for the upcoming Download GET request If (contentType.Contains("application/vnd.ms-excel")) Handle the response with the Excel download Page.Response += async (sender, responseCreatedEventArgs) => Handle multiple responses and process the Download await using (var browser = await Puppeteer.LaunchAsync(new LaunchOptions ))Īwait using (var page = await browser.NewPageAsync()) 2 comments thesam commented on Puppeteer version: 10.4.0 Platform / OS version: Ubuntu 21.Once I had that particular response, I had to attach headers and cookies for the remote server to send the downloadable data in the response. 83.2k Code Issues Pull requests 7 Actions Security Insights New issue PUPPETEERDOWNLOADPATH does not support relative paths 7592 Closed thesam opened this issue on In essence, before the button click, I had to process multiple responses and handle a single response with the download. I needed both Headers and Cookies set before the download would start. You should find Puppeteer executes successfully, provided proper Chrome flags are used.I had a more difficult variation of this, using Puppeteer Sharp. Chrome will write into /tmp instead.Īdd your JavaScript to your container with a COPY instruction. disable-dev-shm-usage – This flag is necessary to avoid running into issues with Docker’s default low shared memory space of 64MB.If you’re uncomfortable with this, you’ll need to manually configure working Chrome sandboxing, which is a more involved process. It’s vital you ensure your Docker containers are strongly isolated from your host. Using these flags could allow malicious web content to escape the browser process and compromise the host. no-sandbox and disable-setuid-sandbox – These disable Chrome’s sandboxing, a step which is required when running as the root user (the default in a Docker container).Setting this flag explicitly instructs Chrome not to try and use GPU-based rendering. disable-gpu – The GPU isn’t usually available inside a Docker container, unless you’ve specially configured the host.

0 kommentar(er)

0 kommentar(er)